Collaborative Visual SLAM using Compressed Feature Exchange

IEEE Robotics and Automation Letters

Munich, Germany, January 2019, Preprint LMT, IEEE

Abstract:

In the field of robotics, collaborative Simultaneous Localization and Mapping (SLAM) is still a challenging problem. The exploration of unknown large-scale environments benefits from sharing the work among multiple agents possibly equipped with different abilities, such as aerial or ground-based vehicles. In this letter, we specifically address data-efficiency for the exchange of visual information in a collaborative visual SLAM setup. For efficient data exchange, we extend a compression scheme for local binary features by two additional modes providing support for local features with additional depth information and an inter-view coding mode exploiting the spatial relations between views of a stereo camera system. To demonstrate the coding framework, we use a centralized system architecture based on ORB-SLAM2, where energy constrained agents extract local binary features and send a compressed version over a network to a more powerful agent, which is capable of running several visual SLAM instances in parallel. We exploit the information from other agents by detecting overlap between already mapped areas and subsequent merging of the maps. Henceforth, the participants contribute to a joint representation and benefit from shared map information. We show a reduction in terms of data-rate by 70.8 % for the feature compression and a reduction in absolute trajectory error by 53.7 % using the collaborative mapping strategy on the well-known KITTI dataset. For the benefit of the community, we provide a public version of the source code.

In addition, we propose to use the information from nearby agents by detecting overlap between already mapped areas and subsequent merging of the maps. Henceforth, the participants contribute to a joint global map representation and benefit from shared map information. To this end, we extend a compression scheme for local binary features by exploiting the spatial relations between views obtained by a stereo camera system and implement a collaborative mapping scheme. We demonstrate the effectiveness of both the feature compression approach and the collaborative mapping strategy on the well-known KITTI dataset.

Approach:

The approach is summarized in the following:

- We extend our feature coding framework [1] to support depth value coding

- We complete the feature coding framework by supporting multi-view coding

- We demonstrate the compression in a centralized collaborative visual SLAM architecture.

Our system is based on ORB-SLAM2 [2], and we evaluated our approach on the KITTI dataset [3] and the EuRoC dataset [4]. The feature coding framework builds on [1] and adds a depth value coding mode and a stereo coding mode.
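To illustrate why a stereo coding mode can save bits, the following toy sketch codes the descriptor of one view as an XOR residual against the matching descriptor in the other view. It is not the codec from [1] or from this letter: the function names, the 20 flipped bits, and the i.i.d. bit-flip cost model are assumptions made purely for illustration. Only the 256-bit descriptor length reflects ORB as used in ORB-SLAM2.

```python
import math
import random

DESCRIPTOR_BITS = 256  # ORB descriptors are 256-bit binary strings


def hamming_weight(x: int) -> int:
    """Number of set bits in x."""
    return bin(x).count("1")


def binary_entropy(p: float) -> float:
    """Entropy in bits of a Bernoulli(p) source."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def encode_residual(descriptor: int, reference: int) -> int:
    """Residual of a descriptor relative to a reference descriptor."""
    return descriptor ^ reference


def decode_residual(residual: int, reference: int) -> int:
    """Recover the descriptor from its residual and the same reference."""
    return residual ^ reference


def ideal_cost_bits(flipped_bits: int, total_bits: int = DESCRIPTOR_BITS) -> float:
    """Idealized cost of a residual, ASSUMING independent bit flips with a
    flip probability known to both encoder and decoder (illustration only)."""
    return total_bits * binary_entropy(flipped_bits / total_bits)


def demo(flips: int = 20, seed: int = 0):
    """Build a hypothetical stereo descriptor pair and code right against left."""
    rng = random.Random(seed)
    left = rng.getrandbits(DESCRIPTOR_BITS)
    right = left
    for bit in rng.sample(range(DESCRIPTOR_BITS), flips):
        right ^= 1 << bit  # simulate small appearance changes between views
    residual = encode_residual(right, left)
    assert decode_residual(residual, left) == right  # lossless round trip
    weight = hamming_weight(residual)
    return weight, ideal_cost_bits(weight)


if __name__ == "__main__":
    weight, cost = demo()
    print(f"residual weight: {weight} bits, idealized cost: {cost:.1f} bits")
```

Under these assumptions, a residual with few set bits costs far less than the 256 bits of an independently coded descriptor, which is the intuition behind predicting one view from the other.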


Supplementary Material:

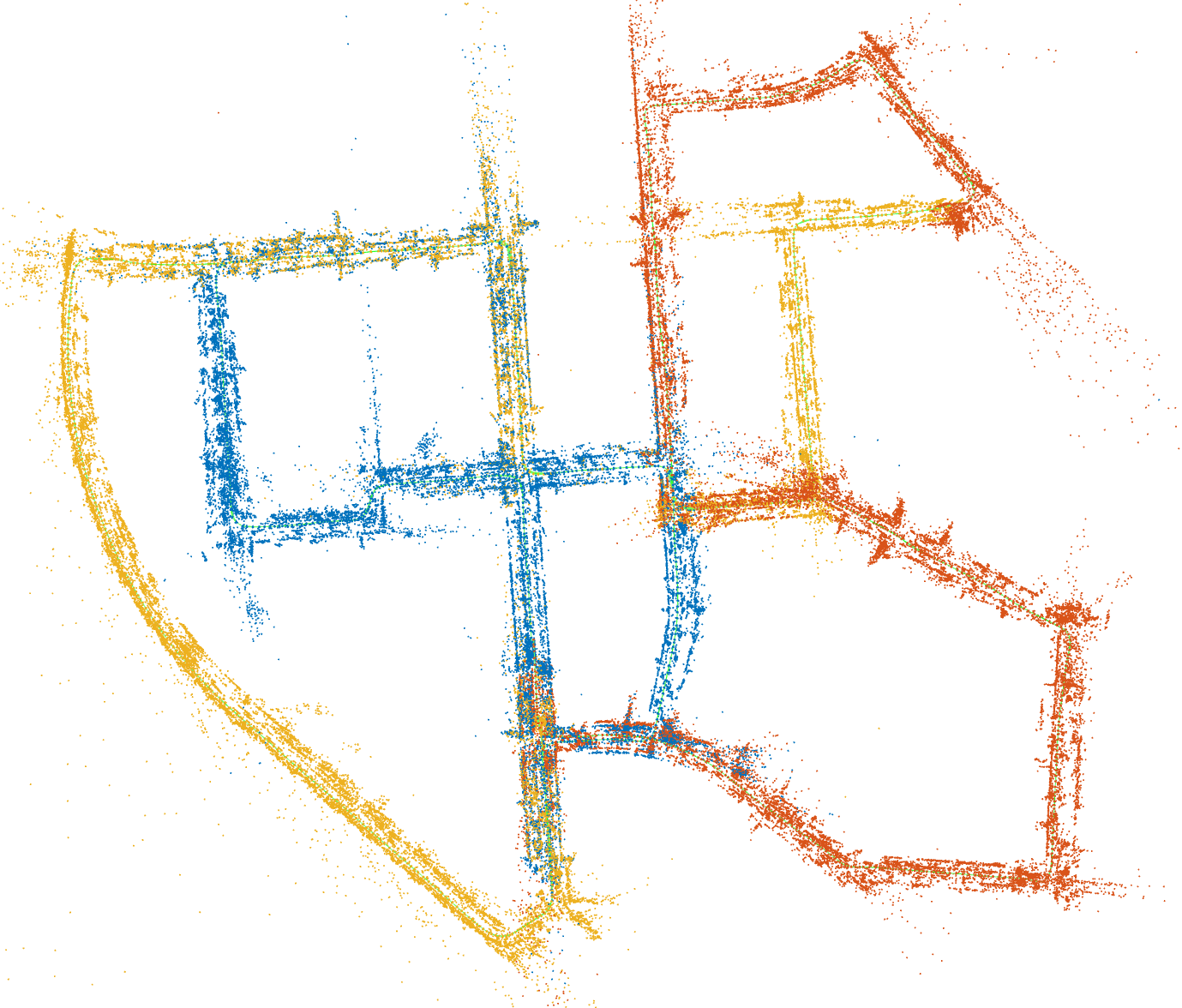

Here, we show additional material not included in the paper. First, we show the trajectories from our experiment in which KITTI 00 is split into three disjoint parts. The ground truth is shown alongside the trajectory estimated by our collaborative approach.
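For readers who want to compare an estimated trajectory with ground truth numerically, the sketch below computes the root-mean-square position error between two trajectories. It is a simplified stand-in for the absolute trajectory error: it assumes that both trajectories are already time-associated and expressed in the same coordinate frame, so the alignment step that usually precedes the computation is skipped. The function name and the sample points are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def ate_rmse(estimated: Sequence[Point], ground_truth: Sequence[Point]) -> float:
    """RMSE of per-pose position errors.

    ASSUMPTION: poses are matched one-to-one and already aligned to the
    ground-truth frame; no rigid or similarity alignment is performed here.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    # Hypothetical three-pose example, not data from the experiments.
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    est = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.4), (2.0, 0.0, 0.0)]
    print(f"RMSE: {ate_rmse(est, gt):.4f} m")
```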


Next, we show the evaluation of our feature coding framework on the EuRoC dataset V101 sequence. First, we evaluate the sequence with the I+S+M+P coding modes and one reference frame for the P mode. For the left view, we added the cost of coding the depth information, as used for the monocular version.


Next, we evaluate the sequence with the I+S+M+P coding modes and four reference frames for the P mode. Again, the left view additionally includes the depth values.


Next, timings are provided for the EuRoC dataset V101 sequence:

| Stereo | I | I+M | I+P1+S | I+P1+S+M | I+P4+S+M |
|---|---|---|---|---|---|
| ORB | 14.5 ms | | | | |
| encoding | 11.5 ms | 12.8 ms | 15.6 ms | 16.4 ms | 26.3 ms |
| decoding | 12.6 ms | 13.3 ms | 13.8 ms | 13.9 ms | 13.5 ms |
| bits/feature | 224.5 | 208.9 | 170.4 | 165.3 | 151.5 |
| #features | 2x1.2k | 2x1.2k | 2x1.2k | 2x1.2k | 2x1.2k |

Next, we present the results for Mono+Depth:

| Mono+Depth | I+D | I+P1+S+D | I+P4+S+D |
|---|---|---|---|
| encoding | 7.7 ms | 9.8 ms | 13.4 ms |
| decoding | 8.6 ms | 8.9 ms | 8.9 ms |
| bits/feature | 230.1 | 175.8 | 160.1 |
| #features | 1.2k | 1.2k | 1.2k |

When changing from a single to four reference frames, the inter-coding mode is used more frequently (compare figures c+d). The encoding time increases from 16.4 ms to 26.3 ms. The measurements were performed on an Intel i7-7700 CPU @ 3.60GHz, with 1200 features per frame (ORB-SLAM2 default settings).
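The bits/feature values reported for the two configurations can be turned into approximate per-frame payloads with a few lines of arithmetic. The sketch below assumes that bits/feature is an average over all features of a frame (both views in the stereo case) and that every frame carries exactly 1200 features per view; the helper names are hypothetical. The savings it reports are relative to the intra-only modes (I and I+D) listed here, so they are not directly comparable with the 70.8 % reduction quoted in the abstract, whose baseline is not stated on this page.

```python
FEATURES_PER_VIEW = 1200  # ORB-SLAM2 default setting used in the measurements

# bits/feature as reported for the EuRoC V101 sequence
STEREO_BITS_PER_FEATURE = {
    "I": 224.5,
    "I+M": 208.9,
    "I+P1+S": 170.4,
    "I+P1+S+M": 165.3,
    "I+P4+S+M": 151.5,
}
MONO_DEPTH_BITS_PER_FEATURE = {
    "I+D": 230.1,
    "I+P1+S+D": 175.8,
    "I+P4+S+D": 160.1,
}


def frame_payload_bytes(bits_per_feature: float, views: int) -> float:
    """Approximate payload of one frame, ASSUMING bits/feature is a per-feature
    average and each view contributes FEATURES_PER_VIEW features."""
    return bits_per_feature * FEATURES_PER_VIEW * views / 8.0


def relative_saving(baseline: float, value: float) -> float:
    """Fraction of bits saved relative to the baseline mode."""
    return 1.0 - value / baseline


def summarize(table: dict, baseline_mode: str, views: int) -> None:
    """Print payload and saving for every mode relative to baseline_mode."""
    baseline = table[baseline_mode]
    for mode, bits in table.items():
        payload_kb = frame_payload_bytes(bits, views) / 1000.0
        saving = 100.0 * relative_saving(baseline, bits)
        print(f"{mode:10s} {payload_kb:6.1f} kB/frame  saving vs {baseline_mode}: {saving:4.1f} %")


if __name__ == "__main__":
    summarize(STEREO_BITS_PER_FEATURE, "I", views=2)
    summarize(MONO_DEPTH_BITS_PER_FEATURE, "I+D", views=1)
```

Under these assumptions, the stereo payload drops from about 67 kB to about 45 kB per frame when moving from I to I+P4+S+M, at the cost of the longer encoding time noted above.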

Citation:

If you refer to our approach in an academic work, please cite:

@article{VanOpdenbosch2019,
  author = {{Van Opdenbosch}, Dominik and Steinbach, Eckehard},
  journal = {IEEE Robotics and Automation Letters (RAL)},
  number = {1},
  pages = {57--64},
  title = {{Collaborative Visual SLAM using Compressed Feature Exchange}},
  volume = {4},
  year = {2019}
}

Preprint is available online at IEEE here

Source Code:

The source code for the collaborative visual SLAM system can be found here.

The binary feature compression pipeline can be found here.

Please contact us via dominik (dot) van-opdenbosch [at] tum (dot) de using “RAL2018” as subject.

Known Issues:

The two images in Figure 5 are mixed up.

References:

[1] D. Van Opdenbosch, M. Oelsch, A. Garcea, T. Aykut, E. Steinbach, Selection and Compression of Local Binary Features for Remote Visual SLAM, IEEE International Conference on Robotics and Automation (ICRA), 2018

[2] R. Mur-Artal and J. D. Tardós, ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras, IEEE Transactions on Robotics, vol. 33, no. 5, pp. 1255-1262, 2017

[3] A. Geiger, P. Lenz, R. Urtasun, Are we ready for autonomous driving? The KITTI vision benchmark suite, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3354-3361, 2012

[4] M. Burri, J. Nikolic, P. Gohl, T. Schneider, J. Rehder, S. Omari, M. Achtelik and R. Siegwart, The EuRoC micro aerial vehicle datasets, The International Journal of Robotics Research, vol. 35, no. 10, pp. 1157-1163, 2016
